38 soft labels machine learning

[2007.03212] Soft Labeling Affects Out-of-Distribution Detection of ... Soft labeling has become a common form of output regularization for generalization and for model compression of deep neural networks. However, the effect of soft labeling on out-of-distribution (OOD) detection, an important topic in machine learning safety, has not been explored. In this study, we show that soft labeling can determine OOD detection performance.

How to Label Data for Machine Learning: Process and Tools - AltexSoft Data labeling (or data annotation) is the process of adding target attributes to training data and labeling them so that a machine learning model can learn what predictions it is expected to make. This process is one of the stages in preparing data for supervised machine learning.

Robust Machine Reading Comprehension by Learning Soft labels In this paper, we propose a robust training method for MRC models to address the label sparseness problem, which limits their generalization. Our method consists of three strategies: 1) label smoothing, 2) word overlapping, and 3) distribution prediction. All of them help to train models on soft labels. We validate our approach on a representative architecture, ALBERT.

[2009.09496] Learning Soft Labels via Meta Learning - arXiv.org (Nidhi Vyas, Shreyas Saxena, Thomas Voice) One-hot labels do not represent soft decision boundaries among concepts, and hence models trained on them are prone to overfitting. Using soft labels as targets provides regularization, but different soft labels might be optimal at different stages of optimization.

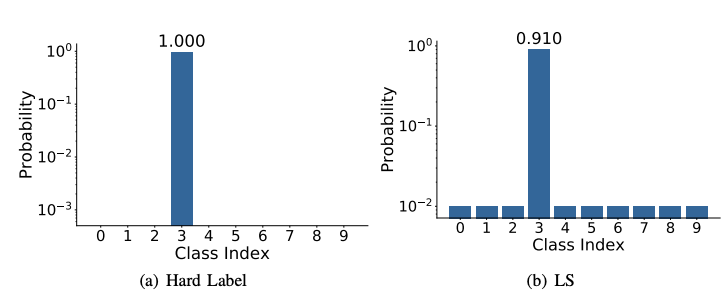

What is the definition of "soft label" and "hard label"? According to Galstyan and Cohen (2007), a hard label is a label assigned to a member of a class where membership is binary: either the element in question is a member of the class (has the label), or it is not. A soft label is one that has a score (probability or likelihood) attached to it. So the element is a member of the class in question with a probability/likelihood score of, e.g., 0.7; this implies that an element can be a member of multiple classes (presumably with different membership ...

Labelling Images - 15 Best Annotation Tools in 2022 Image annotation is more complex than labelling and classifying images, and it needs a larger scale to work most efficiently. Image labelling is easier to conduct than image annotation and is effective at smaller scales as well. It is used for a specific purpose of machine learning, and for a specific audience or algorithm.

Label Smoothing Explained - Papers With Code Label smoothing is a regularization technique that introduces noise into the labels. This accounts for the fact that datasets may contain mistakes, so directly maximizing the likelihood log p(y | x) can be harmful. Assume that, for a small constant ϵ, the training-set label y is correct with probability 1 − ϵ and incorrect otherwise.

ARIMA for Classification with Soft Labels - Medium In this post, we introduced a technique to carry out classification tasks with soft labels and regression models. First, we applied it to tabular data, and then we used it to model time series with ARIMA. It is generally applicable across many contexts and also provides probability scores.
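
A minimal NumPy sketch of the smoothed targets implied by the ϵ assumption above: the observed class keeps probability 1 − ϵ and the remaining ϵ is spread uniformly over the other K − 1 classes. The function name `smooth_labels` and the uniform spread are assumptions for illustration. Some libraries (for example the `label_smoothing` option of Keras' categorical cross-entropy) instead mix in a uniform distribution as (1 − ϵ)·y + ϵ/K, which also leaves ϵ/K on the true class.

```python
import numpy as np


def smooth_labels(labels: np.ndarray, num_classes: int, eps: float) -> np.ndarray:
    """Turn integer class labels into smoothed (soft) one-hot targets.

    Hypothetical helper: the labelled class keeps 1 - eps, and eps is spread
    uniformly over the other num_classes - 1 classes.
    """
    if num_classes < 2:
        raise ValueError("need at least two classes")
    if not 0.0 <= eps < 1.0:
        raise ValueError("eps must be in [0, 1)")
    # Every entry starts with the 'label is wrong' mass eps / (K - 1).
    targets = np.full((len(labels), num_classes), eps / (num_classes - 1))
    # The observed (probably correct) class gets the remaining 1 - eps.
    targets[np.arange(len(labels)), labels] = 1.0 - eps
    return targets


hard = np.array([2, 0])
soft = smooth_labels(hard, num_classes=4, eps=0.1)
print(soft)  # each row sums to 1; the labelled class holds 0.9
```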

scikit-learn classification on soft labels - Stack Overflow Generally speaking, the form of the labels ("hard" or "soft") is determined by the algorithm chosen for prediction and by the target data at hand. If your data has "hard" labels and you want a "soft" label output from your model (which can be thresholded to give a "hard" label), then yes, logistic regression is in this category.

Data Labeling Software: Best Tools for Data Labeling - Neptune The labeling process is optimized for text formats and text-based operations, to create specialized datasets for text-based AI. At its core, the tool is a natural language processing (NLP) text annotation tool. It also provides a platform to manage the work of labeling text manually, take in machine learning models to optimize the task, and ...

Understanding Deep Learning on Controlled Noisy Labels In "Beyond Synthetic Noise: Deep Learning on Controlled Noisy Labels", published at ICML 2020, we make three contributions towards better understanding deep learning on non-synthetic noisy labels. First, we establish the first controlled dataset and benchmark of realistic, real-world label noise sourced from the web (i.e., web label noise). Second, we propose a simple but highly effective method to overcome both synthetic and real-world noisy labels.

Learning classification models with soft-label information Materials and methods: Two types of methods that can learn improved binary classification models from soft labels are proposed. The first relies on probabilistic/numeric labels, the other on ordinal categorical labels. We study and demonstrate the benefits of these methods for learning an alerting model for heparin-induced thrombocytopenia.
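
The Stack Overflow point can be checked directly: the logistic-regression loss accepts fractional targets without modification. This is a minimal sketch with plain NumPy gradient descent, since scikit-learn's `LogisticRegression.fit` expects discrete class labels; the function names, learning rate, epoch count, and toy data are all hypothetical. It illustrates learning from probabilistic labels in general and does not reproduce the heparin study's method.

```python
import numpy as np


def fit_soft_logistic(X, p, lr=0.5, epochs=2000):
    """Binary logistic regression trained directly on soft targets p in [0, 1].

    Minimizes the mean binary cross-entropy
        -[p * log(s) + (1 - p) * log(1 - s)],  s = sigmoid(X @ w + b),
    whose gradient w.r.t. the logit is (s - p), exactly as with 0/1 labels.
    """
    X = np.asarray(X, dtype=float)
    p = np.asarray(p, dtype=float)
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        s = 1.0 / (1.0 + np.exp(-(X @ w + b)))
        grad = s - p  # per-sample gradient of the loss w.r.t. the logit
        w -= lr * X.T @ grad / len(p)
        b -= lr * grad.mean()
    return w, b


def predict_proba(X, w, b):
    return 1.0 / (1.0 + np.exp(-(np.asarray(X, dtype=float) @ w + b)))


# Toy data: one feature, targets are probabilities rather than 0/1.
X = np.array([[-2.0], [-1.0], [0.0], [1.0], [2.0]])
p = np.array([0.05, 0.2, 0.5, 0.8, 0.95])
w, b = fit_soft_logistic(X, p)
probs = predict_proba(X, w, b)        # "soft" output
hard = (probs >= 0.5).astype(int)     # thresholded "hard" label
print(probs.round(3), hard)
```

With scikit-learn, an equivalent trick is to duplicate each row, once with label 1 and `sample_weight=p` and once with label 0 and `sample_weight=1 - p`, which yields the same weighted log loss.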

Multi-Label Text Classification and evaluation | Technovators Survey on Multi-Label Text Classification using NLP and Machine Learning. In this article, we'll look into multi-label text classification, which is the problem of mapping inputs (x) to a set of ...

PDF Efficient Learning with Soft Label Information and Multiple Annotators Note that our approach of learning from auxiliary soft labels is complementary to active learning: while the latter aims to select the most informative examples, we aim to gain more useful information from those selected. This gives us an opportunity to combine these two approaches.

What is the difference between soft and hard labels? - reddit Hard label = binary encoded, e.g. [0, 0, 1, 0]. Soft label = probability encoded, e.g. [0.1, 0.2, 0.5, 0.2]. Soft labels have the potential to tell a model more about the meaning of each sample.

Binary classification with soft labels - Best Machine Learning Projects Hello everyone, I am kinda new in the field and I am having trouble trying to build a CNN that detects a certain type of event in an image using soft labels.
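
A small illustration of the hard/soft distinction from the reddit answer, using a probability vector that sums to 1, as a soft label should. The class names are hypothetical. Collapsing a soft label to a hard one is an argmax, and that step discards the extra information the answer refers to.

```python
import numpy as np

classes = ["cat", "dog", "bird", "fish"]  # hypothetical class names

soft = np.array([0.1, 0.2, 0.5, 0.2])     # probability-encoded soft label
assert np.isclose(soft.sum(), 1.0)        # a valid distribution sums to 1

# Collapsing to a hard label keeps only the most likely class...
hard_index = int(np.argmax(soft))
hard = np.eye(len(classes))[hard_index]   # one-hot (binary-encoded) label

# ...and forgets how the rest of the mass was spread, e.g. that "dog" and
# "fish" were each judged twice as plausible as "cat".
print(classes[hard_index], hard)
```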

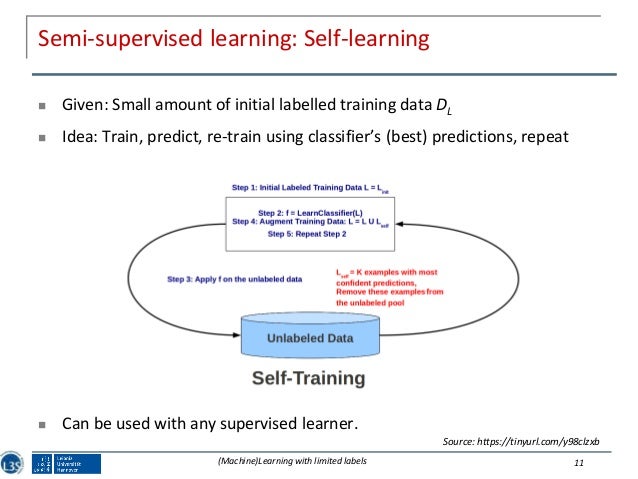

Pseudo Labelling - A Guide To Semi-Supervised Learning There are three kinds of machine learning approaches: supervised, unsupervised, and reinforcement learning. Supervised learning is where both data and labels are present. Unsupervised learning is where only data and no labels are present. Reinforcement learning is where agents learn from the rewards generated by the actions they take.

Is it okay to use cross entropy loss function with soft labels? I have a classification problem where pixels will be labeled with soft labels (which denote probabilities) rather than hard 0,1 labels. Earlier with hard 0,1 pixel labeling the cross entropy loss ...
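
Cross-entropy is well defined for soft targets: H(q, p) = −Σ_k q_k log p_k, and it decomposes as H(q) + KL(q ‖ p), so it is minimized when the prediction p equals the soft target q. The minimum is the entropy H(q) rather than zero, which is why the loss stops reaching 0 once 0/1 labels are replaced by probabilities. Below is a minimal NumPy sketch for a single pixel; the target and logits are made-up values. Recent PyTorch versions' `nn.CrossEntropyLoss` also accepts class-probability targets directly.

```python
import numpy as np


def softmax(z):
    e = np.exp(z - z.max())  # shift logits for numerical stability
    return e / e.sum()


def cross_entropy(q, p, tiny=1e-12):
    """H(q, p) = -sum_k q_k log p_k for a soft target q and prediction p."""
    return float(-np.sum(q * np.log(p + tiny)))


def entropy(q, tiny=1e-12):
    return cross_entropy(q, q, tiny)


q = np.array([0.7, 0.2, 0.1])                  # soft target for one pixel
p_good = q.copy()                              # prediction equal to the target
p_bad = softmax(np.array([0.0, 2.0, 0.0]))     # prediction favouring class 1

# H(q, p) = H(q) + KL(q || p) >= H(q), with equality only when p == q.
print(cross_entropy(q, p_good), entropy(q), cross_entropy(q, p_bad))
```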

Semi-Supervised Learning With Label Propagation Next, let's explore how to apply the label propagation algorithm to the dataset. The Label Propagation algorithm is available in the scikit-learn Python machine learning library via the LabelPropagation class. The model can be fit just like any other classification model by calling the fit() function and used to make predictions for new data ...
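
A minimal sketch of the scikit-learn class on toy data, assuming two well-separated one-dimensional clusters with a single labeled point each. Unlabeled samples are marked with -1, the convention `LabelPropagation` uses. Besides hard labels in `transduction_`, the fitted model exposes per-sample class distributions in `label_distributions_`, which are soft labels in the sense used throughout this post. The `gamma` value is an arbitrary choice for this data scale.

```python
import numpy as np
from sklearn.semi_supervised import LabelPropagation

# Two well-separated 1-D clusters; only one point per cluster is labeled.
X = np.array([[0.0], [0.1], [0.2], [5.0], [5.1], [5.2]])
y = np.array([0, -1, -1, 1, -1, -1])  # -1 marks unlabeled samples

model = LabelPropagation(kernel="rbf", gamma=20)
model.fit(X, y)

print(model.transduction_)              # labels inferred for training points
print(model.label_distributions_)       # per-point class distributions
print(model.predict([[0.15], [4.9]]))   # new data, like any classifier
```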

Information Gain Propagation: A New Way to Graph Active Learning with ... Our key innovations are: i) relaxed queries, where a domain expert (oracle) only judges whether the predicted label is correct (a binary question) rather than identifying the exact class (a multi-class question), and ii) a new criterion of maximizing information gain propagation for an active learner with relaxed queries and soft labels.
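
The snippet does not say how a yes/no answer turns into a label. One natural reading is sketched here as an assumption rather than the paper's exact rule: a confirmed prediction becomes a one-hot label, while a rejected one rules out the predicted class and renormalizes the model's remaining probability mass into a soft label. The function `update_soft_label` is hypothetical.

```python
import numpy as np


def update_soft_label(pred: np.ndarray, oracle_says_correct: bool) -> np.ndarray:
    """Hypothetical soft-label update after a relaxed (yes/no) oracle query.

    pred: the model's predicted class distribution for one node.
    """
    top = int(np.argmax(pred))
    if oracle_says_correct:
        # Confirmed: the node's class is now known exactly.
        return np.eye(len(pred))[top]
    # Rejected: rule out the predicted class, keep the rest as a soft label.
    label = pred.astype(float).copy()
    label[top] = 0.0
    total = label.sum()
    if total == 0.0:
        # No mass left elsewhere: fall back to uniform over the other classes.
        label = np.ones(len(pred))
        label[top] = 0.0
        total = label.sum()
    return label / total


pred = np.array([0.5, 0.3, 0.2])
print(update_soft_label(pred, True))    # one-hot on class 0
print(update_soft_label(pred, False))   # class 0 ruled out: [0, 0.6, 0.4]
```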

The Ultimate Guide to Data Labeling for Machine Learning In machine learning, if you have labeled data, that means your data is marked up, or annotated, to show the target, which is the answer you want your machine learning model to predict. In general, data labeling can refer to tasks that include data tagging, annotation, classification, moderation, transcription, or processing.

How to Develop Voting Ensembles With Python - Machine Learning Mastery Voting is an ensemble machine learning algorithm. For regression, a voting ensemble involves making a prediction that is the average of multiple other regression models. In classification, a hard voting ensemble involves summing the votes for crisp class labels from other models and predicting the class with the most votes. A soft voting ensemble involves summing the predicted probabilities ...
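
Hard and soft voting disagree when a confident minority outweighs a lukewarm majority. Below is a minimal NumPy sketch with made-up probabilities from three hypothetical models for a single sample. scikit-learn offers the same choice as `VotingClassifier(voting="hard")` versus `voting="soft"`.

```python
import numpy as np

# Predicted class probabilities from three hypothetical models for one
# sample (rows: models, columns: classes 0 and 1).
probs = np.array([
    [0.90, 0.10],
    [0.40, 0.60],
    [0.45, 0.55],
])

# Hard voting: each model casts one vote for its most likely class.
votes = np.bincount(probs.argmax(axis=1), minlength=probs.shape[1])
hard_choice = int(votes.argmax())        # class 1 wins two votes to one

# Soft voting: sum the probabilities per class, then take the argmax.
soft_scores = probs.sum(axis=0)          # about [1.75, 1.25]
soft_choice = int(soft_scores.argmax())  # class 0 wins

print(votes, hard_choice, soft_scores, soft_choice)
```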

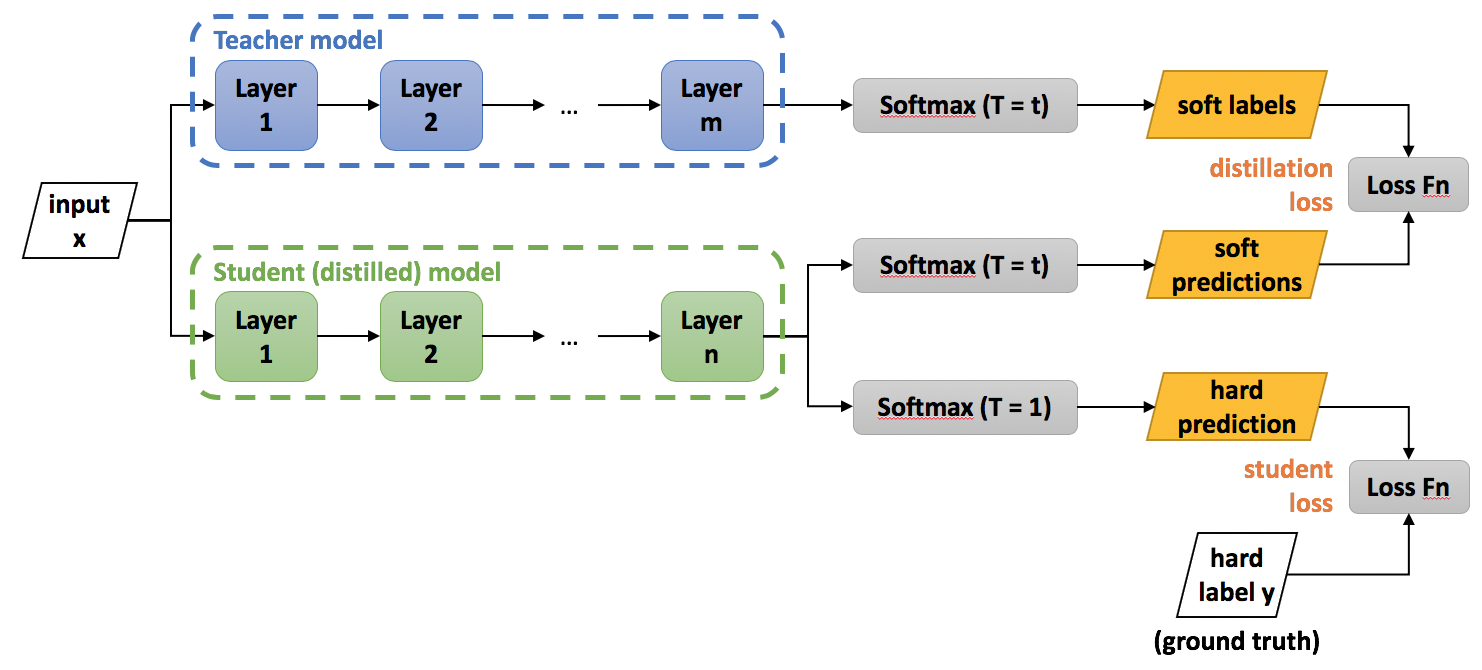

What is Label Smoothing? - Towards Data Science Label smoothing is used when the loss function is cross-entropy and the model applies the softmax function to the penultimate layer's logit vector z to compute its output probabilities p. In this setting, the gradient of the cross-entropy loss with respect to the logits is simply ∇_z CE = p − y = softmax(z) − y, where y is the target distribution.
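
A quick numerical check of the gradient formula, here using the (1 − ε)·y + ε/K form of smoothing on a made-up logit vector. The identity softmax(z) − y holds for any target y whose entries sum to 1, so it applies unchanged to smoothed labels. The practical difference is that a smoothed y contains no exact zeros or ones, so softmax(z) can equal y and the gradient can vanish at finite logits.

```python
import numpy as np


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


def ce(z, y):
    """Cross-entropy between target distribution y and softmax(z)."""
    return float(-np.sum(y * np.log(softmax(z))))


z = np.array([2.0, 0.5, -1.0])
eps, K = 0.1, 3
y = (1 - eps) * np.eye(K)[0] + eps / K   # smoothed target for class 0

analytic = softmax(z) - y

# Central finite differences as an independent check of the formula.
h = 1e-6
numeric = np.array([
    (ce(z + h * e_k, y) - ce(z - h * e_k, y)) / (2 * h) for e_k in np.eye(K)
])
print(analytic, numeric, np.allclose(analytic, numeric, atol=1e-6))
```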

Eliciting and Learning with Soft Labels from Every Annotator The labels used to train machine learning (ML) models are of paramount importance. Typically for ML classification tasks, datasets contain hard labels, yet learning using soft labels has been shown to yield benefits for model generalization, robustness, and calibration.
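
One simple way to turn several annotators' responses into a single soft label is to average their probability vectors, treating a hard vote as a one-hot vector. This is a sketch under that assumption; the snippet does not say which aggregation the paper uses, and the classes and responses below are hypothetical.

```python
import numpy as np

# Hypothetical responses from three annotators for one image over the
# classes [cat, dog, fox]. Each row is one annotator's probability vector;
# a plain hard vote is just a one-hot row.
annotator_labels = np.array([
    [0.8, 0.2, 0.0],   # fairly sure it is a cat
    [0.5, 0.5, 0.0],   # torn between cat and dog
    [0.0, 1.0, 0.0],   # hard vote: dog
])

# Simple aggregation: average the per-annotator distributions.
soft_label = annotator_labels.mean(axis=0)
print(soft_label)  # roughly [0.433, 0.567, 0.0]
```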

Label smoothing with Keras, TensorFlow, and Deep Learning This type of label assignment is called soft label assignment. Unlike hard label assignment, where class labels are binary (i.e., positive for one class and negative for all other classes), soft label assignment allows the positive class to have the largest probability while all other classes have a very small probability.

Soft Labels Transfer with Discriminative Representations Learning for Unsupervised Domain Adaptation Domain adaptation aims to address the challenge of transferring the knowledge ...
